The FDA admits they’re struggling to keep up

When it comes to the ethics of medical technology, one of the best places to look for guidance is in the history books. Decades ago, the pioneers of generative AI predicted the technology would develop to the level it has today. And some of those same pioneers warned against allowing AI to act as a healthcare provider.

In the 1960s, artificial intelligence pioneer Joseph Weizenbaum created one of the first-ever generative chatbots: a program named ELIZA, after the protagonist of My Fair Lady. Like some of the mental health applications of generative AI today, ELIZA was designed to sound like a therapist. However, far from asserting AI would soon replace human practitioners, Weizenbaum contended ELIZA was a “party trick.” In his eyes, an AI chatbot like this could never function like a therapist because it couldn’t experience human emotion and empathy.

Today, however, we’re getting closer than ever to the applications Weizenbaum warned against. Tech pundits such as Sun Microsystems co-founder Vinod Khosla predict that, within the decade, the FDA will approve an AI-enabled digital health app to practice medicine like your primary care provider. Though we’ve been confronting these questions for decades, now is the time to make the key decisions that will determine the future of our industry. But who should lead the way in setting those ethical standards? Who will determine medical AI’s checks and balances?

One option is the digital health industry itself. We recently conducted a Pulse Check interview with Dr. Emilia Molimpakis, CEO of mental health AI startup thymia. Dr. Molimpakis told us that she sees leadership in the ethics of medical AI as her most important responsibility as a founder in this space. Indeed, as we venture into uncharted waters, pioneering innovators are the ones we’ll need to lead by example.

However, innovators aren’t regulators. And they’re certainly not enforcers. That’s why stakeholders from the WHO to generative AI leaders like OpenAI CEO Sam Altman are calling on lawmakers to regulate the technology—and quickly. And regulators are taking action, holding hearings, and drafting regulatory standards. But at the same time, with the accelerated rate of technological innovation in medicine, regulators are struggling to keep up.

And what about patients? In our Chart of the Week this edition, we see that many clinicians across the globe are concerned about the way future digital technologies may exacerbate existing health inequalities among patients. Patient advocates are already speaking out about how medical innovators can design products to better serve them—and not just certain subsets of the population. I’m optimistic that their voices will be pivotal in the medical AI conversation.

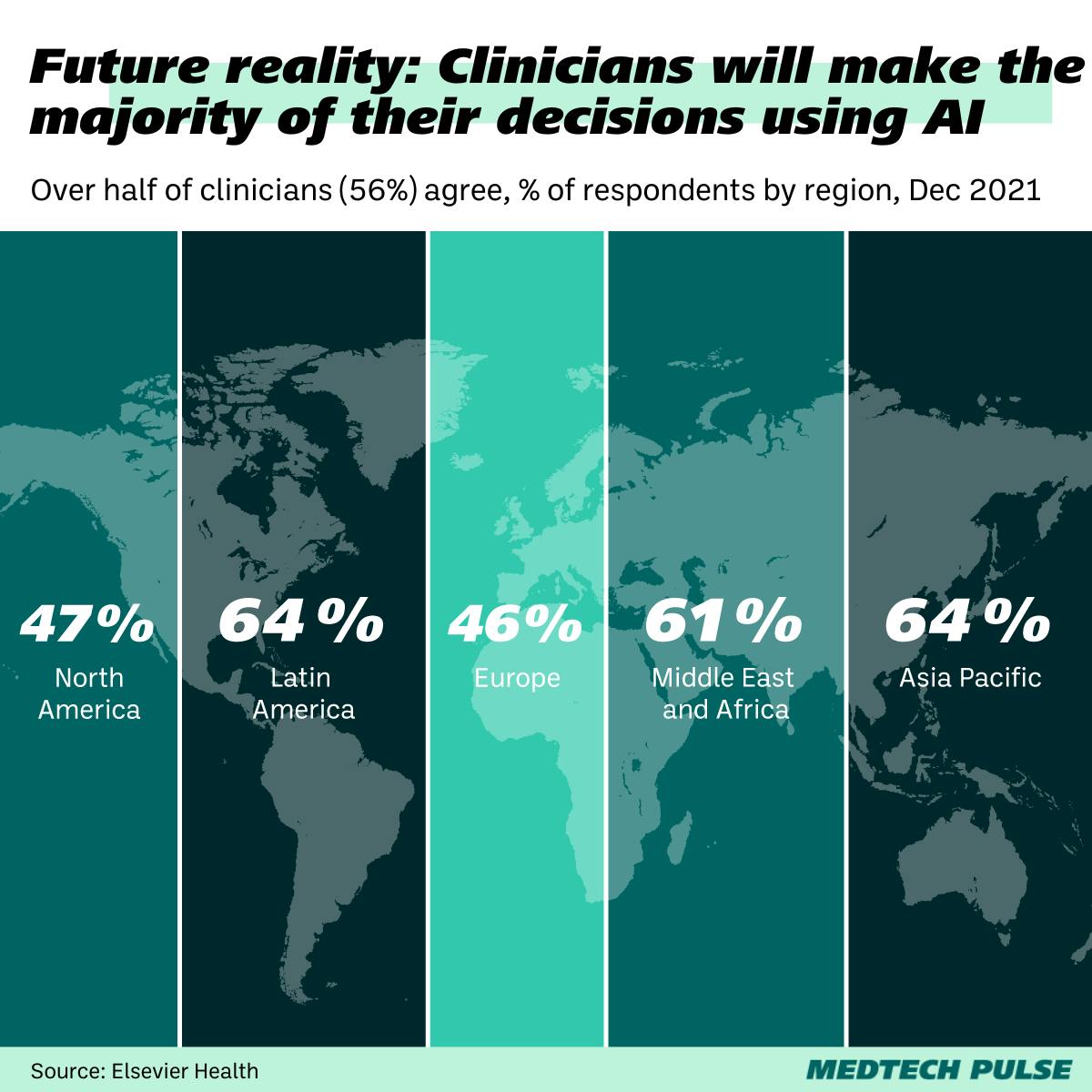

Ultimately, medical AI development is accelerating. Clinicians around the world already agree: AI will, at the very least, change the landscape of clinical decision support tools in the near future.

It’s hard to imagine we can stop this acceleration. Nor would we want to! You’ll see in our lead article today how excited I am about the fantastic ways AI is making radiology more efficient, accurate, and effective. Rather than try to stop it, we must each step up in our respective roles, whether as executives, researchers, or patient advocates, and educate ourselves on this technology so that we can speak up for the path we wish to see it take.